Big data is rarely neat and tidy. In our experience, big data starts as a mess, and our first task is to bring order to hundreds of gigabytes of information.

In this blog post, we'll explore things to think about when preparing big data.

So, what exactly makes the data messy? Unfortunately, in the world of big data, it is often a combination of many things. This list should give you some ideas of what to look for, and how to go about fixing them.

Database format

Sales keep all of their data in Salesforce, and the marketing boffins have their customer segmentation analysis stored in SAS, the finance crew has everything they need in SAP...and you've been told, in Tim Gunn fashion, to "make it work."

Before business intelligence and data-prep tools like Tableau and Alteryx came along, making it work was an arduous task. Frequently, the only solution to get multiple data files talking was to save them into a common format and then merge the files.

While this approach is do-able, it is time-consuming, inefficient, and has little recourse if dynamic data is part of the process.

These days, big data tools can work with multiple files in their native formats. The data can be unioned and manipulated as if it were one giant set of information while retaining links to the individual source files. This non-destructive approach allows for much experimentation without fear of destroying the original data.

Size of data files

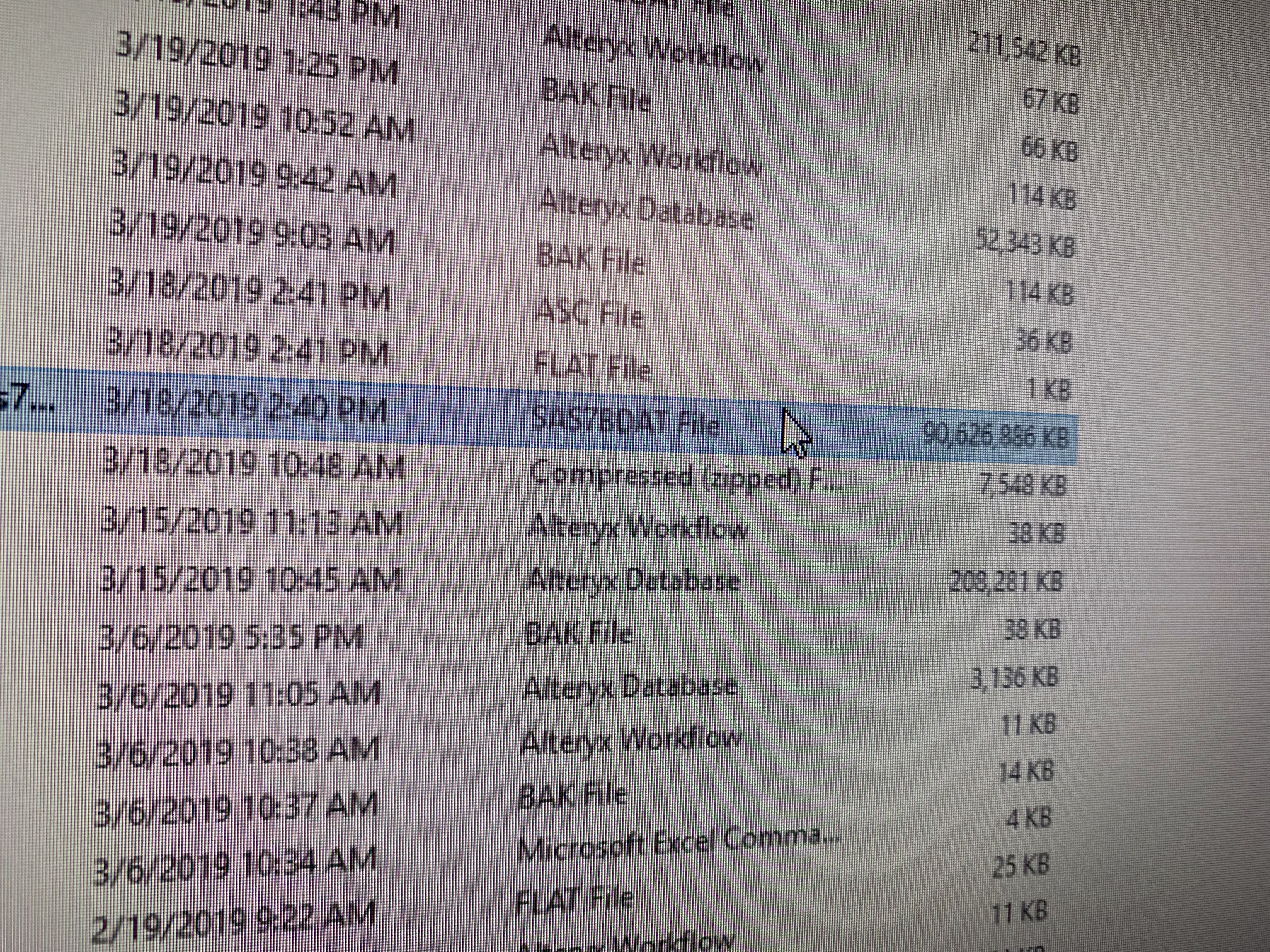

One of the frustrating problems with big data is that the file size is, well, big! We often deal with data that are tens of gigabytes. In fact, check out the screenshot at the top of this post - that's a 90gb file! Just try opening a 90gb file in Access or Excel - it's likely your computer will freeze-up or just plain crash. Of course, one solution might be to invest in some serious hardware, but even our liquid-cooled 32gb RAM, quad-core i7 struggles in dealing with individual big data files. Multiple sets of data - no chance.

Tools designed specifically for managing and mangling big data allow you to load and preview the data in seconds. Yes - seconds. Alteryx, for example, will let you link to multiple huge datasets all day long. The key - it is simply connecting to a file but not importing or processing it. As a user, you can then go in and setup manipulations and code and then tell the program to process that data. So, the only time your system uses resources is when the process runs.

In all fairness, this processing can take some time - especially if you are joining lots of large files, and then executing various formulas and manipulations. Pro-tip: if you're testing your workflows in Alteryx, work with a small test sample of 20,000 records before you unleash yourself on all 20 million (this is a simple instruction in Alteryx - no need to create a separate file of 20,000 records!). Trust us; this will save you so much time and frustration!

Missing data

Missing data is the absolute bane of our lives. However, the reality is that different data sources may come in varying levels of completeness. You can't find patterns in data if that data doesn't exist in the first place, but you can minimize the amount of missing data by employing a bit of detective work and thinking outside the box.Being able to see what information you have across multiple datasets is essential. We've had much luck finding the missing data in other internal files, and we use Alteryx to redirect that information to where it needs to go.

Sometimes we have to use logic to fill our data gaps. The missing data could be a logical function of other information that is present. For example, we can use IF/THEN statements to create criteria which, if satisfied, populates our missing data with the correct information.

We frequently run into issues where the missing data is present, and it's hidden among garbled nonsense in another field. For example:

20124058563211>0210O5454542Y,PHAT

Let's say our missing data is a customer phone number, but all we have is order data. By putting together some detective work, we uncover the order field consists of multiple data points.

Year of order - phone number - month - day - order number - product category.

By using a pattern-based parsing system, like RegEx in Alteryx, we can automate a solution which extracts the phone number (405-856-3211). RegEx is capable of recognizing patterns even if the information is jumbled, unlike a delimited process which relies on the data either being in a fixed position or separated by a nominated delimiter such as a comma or a pipe.

20124058563211>0210O5454542Y,PHAT

O25236564334554L,PHAT20124058563211>0210

Above we have our original example, and below we have another way order data could be stored in the same dataset. The information is now out of order, but since RegEx recognizes patterns in data, it will still be able to extract the phone number. RegEx solutions kind of work like this:

- Anything that starts with an" O," has a sequence of numbers of any length, and ends in a capital letter is an Order Number.

- Anything that starts with a comma, and is followed by a sequence of four letters is a product category.

- Any series of 4 numbers that are in the range of 2000-2019 is always going to be the year of order.

- Any 10-digit number that follows the year of the order will always be the phone number.

The RegEx trick has helped us extract otherwise missing data from many projects!

Dirty data

We mentioned large file sizes come into play with big data. The larger the file, the longer the time it will take to process.Even before you blend it with other data, a file with millions of records and hundreds of fields is always going to be massive - that's just the nature of big data. However, a lot of big data suffers from bloat - files are bigger than they should be.

For example, the system may read a 10-digit customer lifestyle code as a 400 digit number because the field contains a significant amount of white space. Alternatively, a string field may default to a length of 100,000 characters when it only needs to be set to a maximum of 40.

Big data tools frequently contain cleansing options which can eliminate the wasted space and set each field to its optimum length and type. Recently we reduced a 380gb file into 90gb by eliminating invisible white space and setting appropriate field lengths. 190gb of space was nothing!

Let us know if you have any other pro-tips when it comes to preparing big data sets. Hey, if you're having problems with your big data let us know, too. We'd love to help!

Posted by EvolveKev

Kevin is all about research. Qualitative, quantitative, UX, you name it. When he's not researching, he's to be found laying down beats in his studio and hanging out with his dogs (and girlfriend). Woof.